I have self-hosted an OpenReplay instance on AWS and use an AWS Load Balancer to handle SSL.

The DNS provider is Cloudflare (I am aware of the proxy-body-size bug and have changed the value to 40m from the default of 10m).
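For reference, this is roughly how the effective value can be checked. It is only a sketch: it assumes the limit is set through the standard ingress-nginx `nginx.ingress.kubernetes.io/proxy-body-size` annotation and that the ingresses live in the `app` namespace, and `body_size_values` is a helper name made up for illustration.

```shell
# Hypothetical helper: reads ingress YAML on stdin and prints each distinct
# proxy-body-size value found in annotation lines.
body_size_values() {
  awk -F': *' '/proxy-body-size:/ { gsub(/"/, "", $2); print $2 }' | sort -u
}

# Usage against a live cluster (namespace "app" is an assumption):
#   kubectl get ingress -n app -o yaml | body_size_values
```

If the change took effect everywhere, the helper should print a single value.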

Here is the output of `openreplay -s`:

This machine is AMD (x86_64) architecture.

[INFO] Using KUBECONFIG /etc/rancher/k3s/k3s.yaml

- Checking OpenReplay Components Status

[INFO] OpenReplay Version

v1.22.0

[INFO] Disk

Filesystem Size Used Avail Use% Mounted on

/dev/root 49G 11G 38G 23% /

[INFO] Memory

total used free shared buff/cache available

Mem: 7.6Gi 3.0Gi 237Mi 35Mi 4.4Gi 4.3Gi

Swap: 0B 0B 0B

[INFO] CPU

Linux ip-172-31-25-181 6.8.0-1024-aws #26~22.04.1-Ubuntu SMP Wed Feb 19 06:54:57 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

PRETTY_NAME="Ubuntu 22.04.5 LTS"

CPU Count: 2

[INFO] Kubernetes

Client Version: v1.25.0

Kustomize Version: v4.5.7

Server Version: v1.31.5+k3s1

[INFO] Openreplay Component

NAME READY STATUS RESTARTS AGE

alerts-openreplay-69f6c7f9f9-d9xj4 1/1 Running 0 27m

analytics-openreplay-5685997559-jt86p 1/1 Running 0 38m

assets-openreplay-78b97499fc-9kq8p 1/1 Running 0 27m

assist-openreplay-6f695dbbff-gpn6v 1/1 Running 0 27m

canvases-openreplay-598f5bc59f-9z9dl 1/1 Running 0 27m

chalice-openreplay-68d66c989b-ncltb 1/1 Running 0 27m

databases-migrate-b2tqg 0/3 Completed 0 39m

db-openreplay-5885487d77-7vpjq 1/1 Running 0 27m

ender-openreplay-866cb56c79-hfhjp 1/1 Running 0 27m

frontend-openreplay-766fbd9894-j4tb6 1/1 Running 0 38m

heuristics-openreplay-b6967c66d-9xnrt 1/1 Running 0 38m

http-openreplay-d86f9c599-mqg98 1/1 Running 0 26m

images-openreplay-845c6f766f-hpqrz 1/1 Running 0 26m

integrations-openreplay-5b6889c97b-fctlc 1/1 Running 0 26m

kyverno-admission-controller-d574d8d8b-mcrq4 1/1 Running 0 40m

kyverno-background-controller-765fbfdc56-cjbm2 1/1 Running 0 40m

kyverno-cleanup-admission-reports-29130410-cprcn 0/1 Completed 0 5m58s

kyverno-cleanup-cluster-admission-reports-29130410-768q5 0/1 Completed 0 5m58s

kyverno-cleanup-controller-7588887798-fh96f 1/1 Running 0 40m

kyverno-reports-controller-7f8c64fdb-l5982 1/1 Running 0 40m

openreplay-ingress-nginx-controller-76c757d59b-649zj 1/1 Running 0 38m

sink-openreplay-854564f4f-zwlng 1/1 Running 0 38m

sourcemapreader-openreplay-66dd84f7fd-kxs5j 1/1 Running 0 26m

spot-openreplay-5d6cff7986-2qhg9 0/1 CrashLoopBackOff 9 (3m44s ago) 26m

storage-openreplay-58785554b6-96cfl 1/1 Running 0 26m

NAME READY STATUS RESTARTS AGE

databases-clickhouse-0 2/2 Running 0 40m

minio-578695cdbd-9xpzf 1/1 Running 0 40m

postgresql-0 1/1 Running 0 40m

redis-master-0 1/1 Running 0 40m

[INFO] Openreplay Images

alerts-openreplay-69f6c7f9f9-d9xj4 public.ecr.aws/p1t3u8a3/alerts:v1.22.0

analytics-openreplay-5685997559-jt86p public.ecr.aws/p1t3u8a3/analytics:v1.22.0

assets-openreplay-78b97499fc-9kq8p public.ecr.aws/p1t3u8a3/assets:v1.22.0

assist-openreplay-6f695dbbff-gpn6v public.ecr.aws/p1t3u8a3/assist:v1.22.4

canvases-openreplay-598f5bc59f-9z9dl public.ecr.aws/p1t3u8a3/canvases:v1.22.0

chalice-openreplay-68d66c989b-ncltb public.ecr.aws/p1t3u8a3/chalice:v1.22.7

databases-migrate-b2tqg bitnami/postgresql:16.3.0 bitnami/minio:2023.11.20 clickhouse/clickhouse-server:22.12-alpine

db-openreplay-5885487d77-7vpjq public.ecr.aws/p1t3u8a3/db:v1.22.1

ender-openreplay-866cb56c79-hfhjp public.ecr.aws/p1t3u8a3/ender:v1.22.0

frontend-openreplay-766fbd9894-j4tb6 public.ecr.aws/p1t3u8a3/frontend:v1.22.35

heuristics-openreplay-b6967c66d-9xnrt public.ecr.aws/p1t3u8a3/heuristics:v1.22.0

http-openreplay-d86f9c599-mqg98 public.ecr.aws/p1t3u8a3/http:v1.22.0

images-openreplay-845c6f766f-hpqrz public.ecr.aws/p1t3u8a3/images:v1.22.0

integrations-openreplay-5b6889c97b-fctlc public.ecr.aws/p1t3u8a3/integrations:v1.22.0

kyverno-admission-controller-d574d8d8b-mcrq4 ghcr.io/kyverno/kyverno:v1.10.0

kyverno-background-controller-765fbfdc56-cjbm2 ghcr.io/kyverno/background-controller:v1.10.0

kyverno-cleanup-admission-reports-29130410-cprcn bitnami/kubectl:1.26.4

kyverno-cleanup-cluster-admission-reports-29130410-768q5 bitnami/kubectl:1.26.4

kyverno-cleanup-controller-7588887798-fh96f ghcr.io/kyverno/cleanup-controller:v1.10.0

kyverno-reports-controller-7f8c64fdb-l5982 ghcr.io/kyverno/reports-controller:v1.10.0

openreplay-ingress-nginx-controller-76c757d59b-649zj registry.k8s.io/ingress-nginx/controller:v1.5.1@sha256:4ba73c697770664c1e00e9f968de14e08f606ff961c76e5d7033a4a9c593c629

sink-openreplay-854564f4f-zwlng public.ecr.aws/p1t3u8a3/sink:v1.22.0

sourcemapreader-openreplay-66dd84f7fd-kxs5j public.ecr.aws/p1t3u8a3/sourcemapreader:v1.22.0

spot-openreplay-5d6cff7986-2qhg9 public.ecr.aws/p1t3u8a3/spot:v1.22.0

storage-openreplay-58785554b6-96cfl public.ecr.aws/p1t3u8a3/storage:v1.22.0

databases-clickhouse-0 alexakulov/clickhouse-backup:latest clickhouse/clickhouse-server:25.1-alpine

minio-578695cdbd-9xpzf docker.io/bitnami/minio:2023.11.20

postgresql-0 docker.io/bitnami/postgresql:17.2.0

redis-master-0 docker.io/bitnami/redis:7.2
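The only component in the listing above that is not healthy is `spot-openreplay`. A quick way to pick such pods out of a long listing is to filter on the STATUS column. This is a minimal sketch: `unhealthy_pods` is a made-up helper name, and it assumes the default `kubectl get pods` column order (NAME, READY, STATUS, ...).

```shell
# Hypothetical helper: reads `kubectl get pods` output on stdin and prints
# the name and status of every pod that is neither Running nor Completed.
unhealthy_pods() {
  awk '$1 != "NAME" && NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage against a live cluster (namespace taken from the logs command in this report):
#   kubectl get pods -n app | unhealthy_pods
```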

And here is the output of

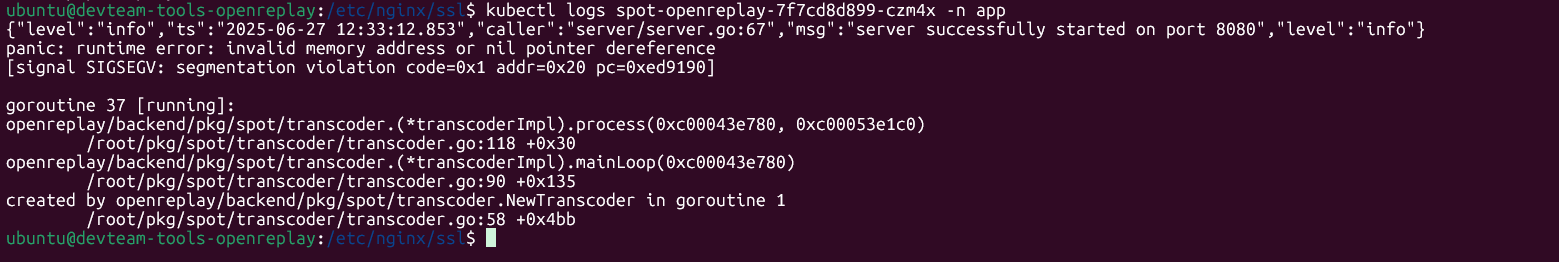

kubectl logs spot-openreplay-5d6cff7986-2qhg9 -n app

{"level":"info","ts":"2025-05-21 11:02:30.208","caller":"server/server.go:67","msg":"server successfully started on port 8080","level":"info"}

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x20 pc=0xed9190]

goroutine 42 [running]:

openreplay/backend/pkg/spot/transcoder.(*transcoderImpl).process(0xc0003b00a0, 0xc0004a8d20)

/root/pkg/spot/transcoder/transcoder.go:118 +0x30

openreplay/backend/pkg/spot/transcoder.(*transcoderImpl).mainLoop(0xc0003b00a0)

/root/pkg/spot/transcoder/transcoder.go:90 +0x135

created by openreplay/backend/pkg/spot/transcoder.NewTranscoder in goroutine 1

/root/pkg/spot/transcoder/transcoder.go:58 +0x4bb
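Because the pod keeps restarting, the trace above only appears in the logs of a container that has already crashed. A sketch of how it can be isolated, assuming the log is saved to a file first: `panic_trace` is a hypothetical helper name, and `--previous` is the standard `kubectl logs` flag for the last terminated container.

```shell
# Hypothetical helper: print everything from the first "panic:" line to the
# end of the given log file, i.e. the Go panic message and its stack trace.
panic_trace() {
  awk '/^panic:/ { found = 1 } found' "$1"
}

# Usage (pod name and namespace taken from this report; spot.log is arbitrary):
#   kubectl logs spot-openreplay-5d6cff7986-2qhg9 -n app --previous > spot.log
#   panic_trace spot.log
```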

Spot actually works for a while after running `openreplay -i DOMAIN_NAME`, but after a few minutes it crashes.

I would greatly appreciate any help regarding this.

Thank you.